Now you can create a new Google Colaboratory session for training the neural network.

After creating a Colaboratory file in your Google Drive, change the runtime type: from the Runtime menu, select Change runtime type and choose GPU as the Hardware accelerator.

STEP 1. Connect the Colab notebook to Google Drive

Execute the following code in a new cell and click the link to authorize the notebook to access your Drive:

# This cell imports the drive library and mounts your Google Drive as a local drive on the VM.

# You can then access your Drive files under the path "/content/gdrive/My Drive/"

from google.colab import drive

drive.mount('/content/gdrive')

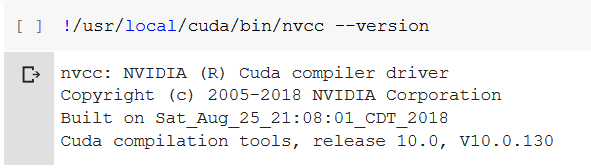

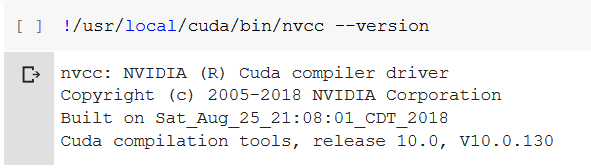
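To confirm that the mount succeeded before moving on, a small check like the following can help. It is a minimal sketch: the helper name drive_is_mounted is hypothetical, and the default path is the one mentioned in the cell comment above.

```python
import os

def drive_is_mounted(root="/content/gdrive/My Drive"):
    """Return True if the mounted Drive folder is visible from the VM."""
    return os.path.isdir(root)

if drive_is_mounted():
    print("Drive is mounted")
else:
    print("Drive is not mounted: rerun the drive.mount cell")
```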

STEP 2. Check CUDA release version

# This cell can be commented out once you have checked the current CUDA version

# CUDA: Let's check that Nvidia CUDA is already preinstalled and which version it is.
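One way to perform this check is to ask the CUDA compiler, nvcc, for its version; in a notebook cell this is typically done by running nvcc --version with a leading exclamation mark. The sketch below does the same from Python and extracts the release number. The function names are hypothetical, and it assumes nvcc is on the PATH (otherwise pass its full path, for example under /usr/local/cuda/bin).

```python
import re
import shutil
import subprocess

def parse_cuda_release(nvcc_output):
    """Extract the 'release X.Y' number from nvcc --version output, or None."""
    match = re.search(r"release (\d+\.\d+)", nvcc_output)
    return match.group(1) if match else None

def cuda_release(nvcc="nvcc"):
    """Run nvcc --version and return the release string, or None if nvcc is not found."""
    exe = shutil.which(nvcc)  # assumption: nvcc is on PATH or given as a full path
    if exe is None:
        return None
    result = subprocess.run([exe, "--version"], capture_output=True, text=True)
    return parse_cuda_release(result.stdout)

print("CUDA release:", cuda_release())
```

The release number returned here is the one to match when choosing the cuDNN package in the next step.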

STEP 3. Install cuDNN according to the current CUDA version

You need to download cuDNN from the Nvidia website.

Since the Colab runtime has CUDA 10.0 preinstalled (as checked in Step 2), download cuDNN v7.5.0.56 for CUDA v10.0.

After that, upload the .tgz file to your Drive (you can create a folder named darknet_colab and put every file related to the training in it).

Now you can unpack the cuDNN files:

# We're unzipping the cuDNN files from your Drive folder directly to the VM CUDA folders

!tar -xzvf gdrive/My\ Drive//cudnn-10.0-linux-x64-v7.5.0.56.tgz -C /usr/local/

!chmod a+r /usr/local/cuda/include/cudnn.h

# Now we check the version we just installed.

!cat /usr/local/cuda/include/cudnn.h | grep CUDNN_MAJOR -A 2

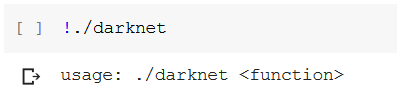
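The same version check can be done in Python by reading the three version defines from cudnn.h. This is a minimal sketch: parse_cudnn_version is a hypothetical helper, and the header excerpt in sample is illustrative text written for this example, not copied from an installed file.

```python
import re

def parse_cudnn_version(header_text):
    """Return (major, minor, patch) from the text of cudnn.h, or None if incomplete."""
    found = dict(
        re.findall(r"#define\s+CUDNN_(MAJOR|MINOR|PATCHLEVEL)\s+(\d+)", header_text)
    )
    try:
        return tuple(int(found[key]) for key in ("MAJOR", "MINOR", "PATCHLEVEL"))
    except KeyError:
        return None

# Illustrative excerpt standing in for the real header contents.
sample = """#define CUDNN_MAJOR 7
#define CUDNN_MINOR 5
#define CUDNN_PATCHLEVEL 0
"""
print(parse_cudnn_version(sample))  # prints (7, 5, 0)
```

On the VM, the function would be called on the contents of /usr/local/cuda/include/cudnn.h, the file inspected by the grep command above.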

STEP 4. Installing Darknet

# Leave this code uncommented on the very first run of your notebook,

# or whenever you need to recompile darknet.

# Comment it out on future runs.

!git clone https://github.com/kriyeng/darknet/

%cd darknet

# Check the folder

!ls

!git checkout feature/google-colab

# Compile Darknet

!make clean

!make

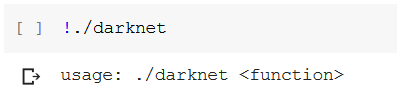

# Check if Darknet runs

!./darknet

If the build succeeded, Darknet prints its usage message (usage: ./darknet <function>).

STEP 5. Training YOLO

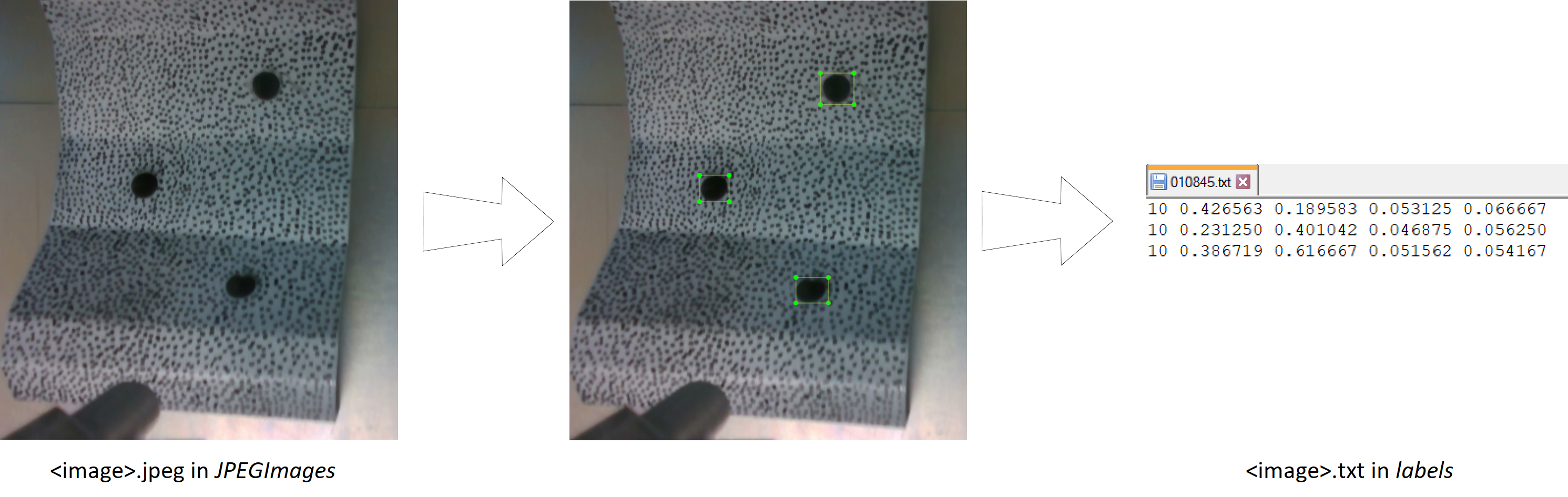

Now you need to import into Colab, from your local file system, all the files you created in the first part of this tutorial. The instructions for doing this are:

from google.colab import files

files.upload()

You need to import the folder that contains the images, the files train.txt, test.txt, <name>.data, <name>.names and <my_dataset>.cfg, and the weights file used to start the training (available here).

When you import a file into Colab, it is placed in your current directory, so you need to move some of the files to specific folders with these instructions:

!mv <name>.data cfg/

!mv <name>.names data/

!mv <my_dataset>.cfg cfg/

Folders can only be imported into Colab as compressed files, so you have to unzip them now:

!unzip <folder_with_images>.zip

The unzipped folder must sit at the same path as the one written in train.txt (and test.txt), so that every image path listed there resolves.

The train.txt and test.txt files and the initial weights file can remain in the main darknet folder.
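A quick way to confirm that the image paths line up is to read each list file and report entries that do not exist on disk. The following is a minimal sketch, assuming each line of train.txt and test.txt holds one image path and that relative paths are resolved from the current directory; missing_images is a hypothetical helper name.

```python
import os

def missing_images(list_file, base_dir="."):
    """Return the entries of list_file that do not exist relative to base_dir."""
    missing = []
    with open(list_file) as handle:
        for line in handle:
            path = line.strip()
            # Absolute paths are kept as-is by os.path.join.
            if path and not os.path.exists(os.path.join(base_dir, path)):
                missing.append(path)
    return missing

for name in ("train.txt", "test.txt"):
    if os.path.exists(name):
        print(name, "missing entries:", len(missing_images(name)))
```

An empty result for both files suggests the folder was unzipped to the expected location.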

Now you can start the training with Darknet:

!./darknet detector train cfg/<name>.data cfg/<my_dataset>.cfg darknet53.conv.74 -dont_show -mjpg_port 8090
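Before launching a long training run, it can be worth checking that the files referenced by the .data file are where Darknet will look for them. The sketch below assumes the usual Darknet layout of key = value lines with train, valid and names entries; the helper names and the file name cfg/obj.data are hypothetical stand-ins for your own cfg/<name>.data.

```python
import os

def read_data_file(path):
    """Parse the 'key = value' lines of a Darknet .data file into a dict."""
    options = {}
    with open(path) as handle:
        for line in handle:
            line = line.strip()
            if not line or line.startswith("#") or "=" not in line:
                continue
            key, value = line.split("=", 1)
            options[key.strip()] = value.strip()
    return options

def missing_inputs(data_path, keys=("train", "valid", "names")):
    """Return the keys whose referenced file is unset or absent on disk."""
    options = read_data_file(data_path)
    return [key for key in keys if not os.path.exists(options.get(key, ""))]

data_path = "cfg/obj.data"  # hypothetical name standing in for cfg/<name>.data
if os.path.exists(data_path):
    print("Missing:", missing_inputs(data_path))
```

Paths inside the .data file are checked relative to the current directory, so the check is meant to be run from the darknet folder, like the training command.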